Mastering Lead Generation for Real Estate: Proven Strategies for Agents

Master lead generation for real estate with proven strategies. Learn digital tactics, advanced methods, and optimization for agents & brokers.

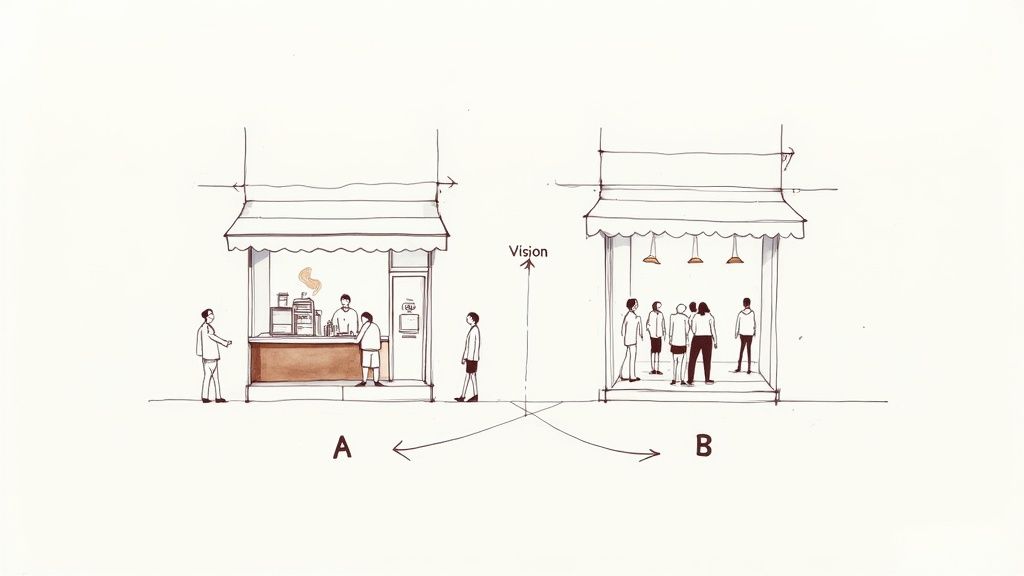

Ever walked past a coffee shop handing out two different free samples—one hot, one iced—just to see which one convinces more people to step inside? That's A/B testing in a nutshell. It's a simple, powerful way to compare two versions of something to see which one works better, letting real customer behavior give you the answer.

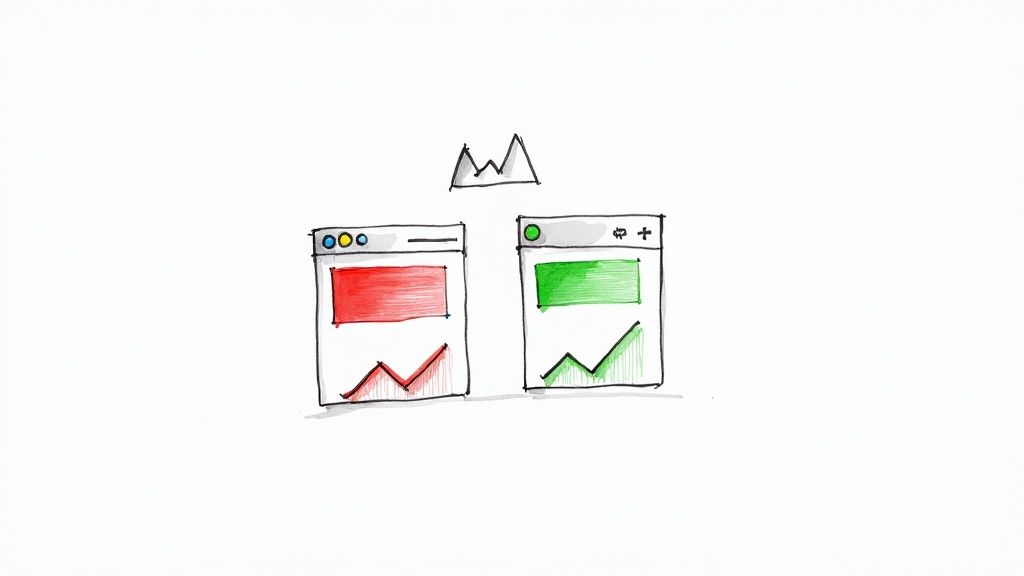

Think of A/B testing as a straightforward scientific experiment for your marketing. You start with what you already have—your original webpage, ad, or email. This is your control, or Version A.

Next, you create a new version with one, and only one, specific change. Maybe you swap out the headline, change the button color, or try a different image. This new version is your variant, or Version B.

Then, you show Version A to one group of your audience and Version B to another, completely at random. By tracking how each group responds, you collect hard data on which version is more effective at getting you the results you want. This simple process takes the guesswork out of marketing. No more "I think this will work." Now you know.
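If you're curious what that split looks like under the hood, here is a minimal sketch. It assumes each visitor has some stable ID (a cookie value, for example) and uses hashing as a stand-in for a coin flip, so the same person always lands in the same group. The function name `assign_variant` and the experiment label are made up for illustration; real testing tools handle this for you and may do it differently.

```python
import hashlib

def assign_variant(visitor_id: str, experiment: str = "headline-test") -> str:
    """Return "A" or "B" for a visitor, stable across repeat visits."""
    # Hash the experiment name together with the visitor ID so that
    # different experiments split the same audience independently.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # a number from 0 to 99
    return "A" if bucket < 50 else "B"

# Simulate 10,000 hypothetical visitors and count how they were split.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1

print(counts)  # expect something close to an even split
```

The point of the design is consistency: a returning visitor should never bounce between Version A and Version B, or their behavior muddies both groups.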

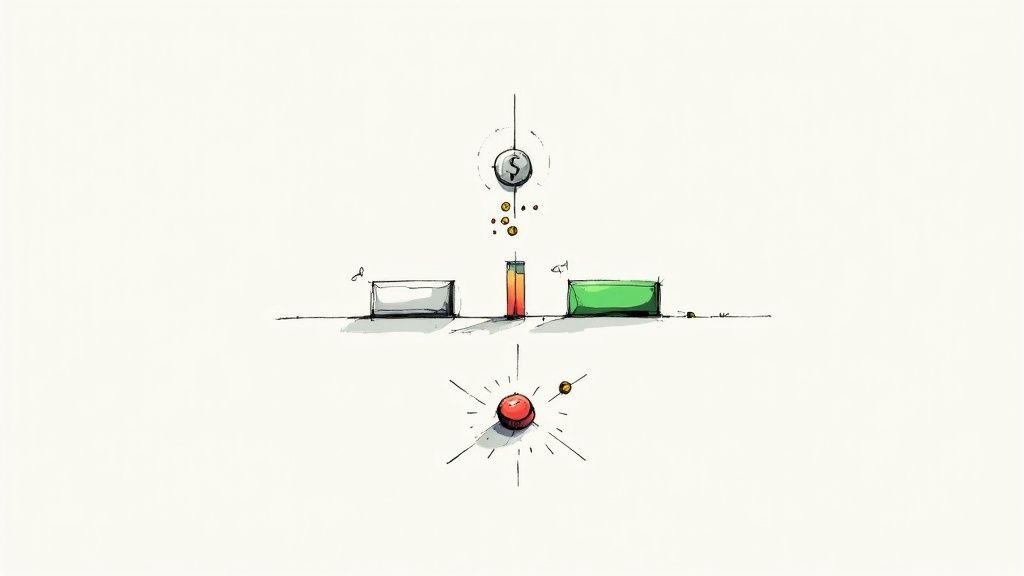

To keep things clear, here’s a quick breakdown of the essential parts of any A/B test.

These components are the building blocks for every test you'll run.

Marketing can often feel like a guessing game. A/B testing flips that on its head. Instead of relying on gut feelings, you can systematically improve every piece of your marketing, one test at a time. Each experiment gives you a peek into your customers' minds, helping you fine-tune their entire journey with your brand.

By truly understanding what works, you can start to see real results:

It’s all about making small, smart improvements that add up to big wins. Each test helps you understand your customers better, which is key to optimizing every stage of your conversion funnel.

A/B testing, often called split testing, is a standard practice. In fact, around 77% of companies are now running A/B tests on their websites, a sign of how essential it has become for businesses of all sizes.

Let's be honest: marketing can often feel like a game of chance. You launch a campaign based on a hunch, cross your fingers, and hope for the best. A/B testing flips that script, turning guesswork into a science of predictable growth.

Instead of just thinking you know what works, you get hard evidence to back up every decision. This data-driven approach directly impacts your bottom line by improving user engagement and boosting conversions. It also takes the risk out of big changes, letting you test an idea with a small slice of your audience before committing your entire budget.

The real magic of A/B testing is seeing how tiny tweaks can lead to massive results. You'd be amazed how something as simple as changing a button's color can have a huge financial impact when scaled across thousands of visitors.

Big tech companies live by this rule. In one famous case, Google tested 41 different shades of blue for its ad links to find the one that got the most clicks. That single change reportedly boosted their annual revenue by $200 million. Stories like this suggest that no detail is too small to test.

At its core, A/B testing gives you clarity. It cuts through the noise of opinions and delivers objective data, showing you the clearest path to better results and a higher return on investment.

Of course, A/B testing works best when it's part of a bigger picture. To get the most out of your experiments, it's worth learning about other powerful conversion rate optimization techniques that can complement your efforts. Ultimately, this methodical approach is what separates the most successful marketers from those making expensive guesses. By understanding what A/B testing in marketing is, you're not just learning a new tactic; you're adopting a mindset of continuous, measurable improvement.

Ready to get your hands dirty? Running your first A/B test is more straightforward than it sounds. Think of it less like a complex scientific experiment and more like following a simple recipe.

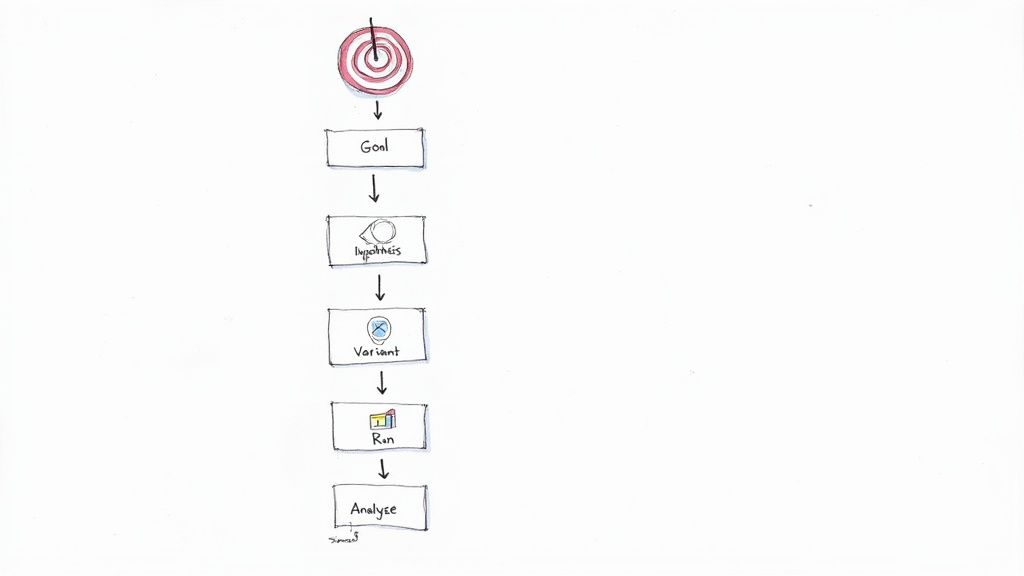

The whole thing starts with a single, clear goal. What do you actually want to improve? Maybe you want more people to sign up for your newsletter, or perhaps you want to get more clicks on a specific product page. Whatever it is, define it first.

Once you know what you're aiming for, you can get down to the nitty-gritty.

This is just a fancy way of saying, "make an educated guess." Your hypothesis is a simple statement that frames your test. It usually follows an "If I do X, then Y will happen" format.

For example, a solid hypothesis could be: "Changing the call-to-action button text from 'Submit' to 'Get Your Free Guide' will increase newsletter sign-ups." This gives your test a clear purpose. You know exactly what you're changing and what you expect to see.

Now for the fun part. You'll create your variant (Version B), which is just your page with that one, single change. And I mean one change. If you change both the button text and the button color, you’ll have no idea which one actually made a difference.

With your original control (Version A) and your new variant ready, it's time to let an A/B testing tool do the heavy lifting. The tool will randomly show Version A to half of your visitors and Version B to the other half. It’s a clean split. As you get deeper into this, our guide on conversion rate optimization best practices can give you even more ideas.

The final step is to check the results. Dive into the data and see which version won. To be truly confident in your conclusion, you need to reach statistical significance, which means the gap between the two versions is large enough that it's unlikely to be a fluke.
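Most testing tools report significance for you, but it helps to see roughly what they are calculating. The sketch below uses a two-proportion z-test, one common way to compare conversion rates. The visitor and conversion counts are invented for illustration, and the 5% threshold is a widely used convention rather than a rule from this article.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, p_value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate: what we'd expect if both versions performed the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    # Two-sided p-value: chance of a gap this large if there is no real difference.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical results: 5,000 visitors per version.
z, p_value = two_proportion_test(conv_a=200, n_a=5000, conv_b=250, n_b=5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("significant at 5%" if p_value < 0.05 else "not significant yet")
```

A small p-value means a gap this size would be rare if the two versions really performed the same. It doesn't tell you how big the improvement is, only that it's probably not noise.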

Ready to get started? You don't need a massive, complex plan to start A/B testing. Some of the most powerful tests are also the simplest. It's all about making small, focused changes that can deliver surprisingly big results. The trick is to always start with a clear hypothesis.

A great place to begin is with the elements that directly guide your customers' actions. Your Call to Action (CTA) is a prime example—it’s one of the most common and impactful things to test because it has a direct line to your conversion rates. If you're looking to get the most out of your experiments, it helps to understand how to optimize your Call to Action (CTA) first.

To give you a better idea of what to test, here's a table with some common marketing elements and simple variations you could try.

This is just scratching the surface. The key is to isolate a single element and see how a change impacts user behavior.

If you run an online store, you know that even the smallest friction can lead to an abandoned cart. Your goal should be to make the journey from browsing to buying as smooth as possible.

For service-based businesses, your landing page has to do some heavy lifting. It needs to build trust and persuade visitors to get in touch. Here, your tests should focus on clarity and making a compelling case.

Your hypothesis could be something as straightforward as: “Changing our headline from a feature-focused statement to a benefit-focused question will increase form submissions by 15%.”
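To check a hypothesis like that, you compare the relative lift between the two versions against your target. Here's a quick sketch with made-up numbers; the helper name `relative_lift` is just for illustration.

```python
def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative improvement of the variant over the control."""
    return (variant_rate - control_rate) / control_rate

# Hypothetical results: form submissions out of 1,000 visitors each.
control_rate = 40 / 1000   # original, feature-focused headline
variant_rate = 47 / 1000   # new, benefit-focused question

lift = relative_lift(control_rate, variant_rate)
target_met = lift >= 0.15  # the 15% goal from the hypothesis

print(f"Lift: {lift:.1%}, target met: {target_met}")
```

Keep in mind that a lift figure on its own says nothing about reliability. With numbers this small, the difference could easily be chance, so pair it with a significance check before you call a winner.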

A simple, clear hypothesis like that gives your test a purpose and tells you exactly what success looks like. Once you have a winning headline, you can move on to other page elements. For a closer look at more advanced tactics, our guide on how to increase website conversions is a great next step.

Running a great A/B test is as much about dodging common pitfalls as it is about having a brilliant idea. A few simple mistakes can make your results unreliable and waste all your effort.

The most common error is changing too many things at once. If you tweak the headline, swap the image, and change the button color all in one go, you'll never know which change actually made a difference.

The fix is simple: stick to the one-variable rule. Only test one element at a time. This is how you isolate what’s causing the change and prove a direct cause-and-effect relationship.

Another huge mistake is pulling the plug on a test too soon. You might see your new version shoot ahead on day one and be tempted to declare victory. But that early data is often just noise from a small sample size—it's not statistically significant.

Patience is your best friend here. You need to let a test run long enough to gather a meaningful amount of data. As a rule of thumb, wait for at least a few hundred conversions for each version before calling a winner.

Finally, don't forget the world outside your test. Did you run your test during a huge holiday sale, a Black Friday rush, or right after a post went viral? Any of these events can send unusual traffic your way and skew your results. To get A/B testing in marketing right, you have to account for these outside factors, for example by avoiding unusual periods or running the test long enough to smooth them out.

Even after you wrap your head around what A/B testing in marketing really means, a few questions tend to resurface. Clearing them up early keeps your experiments on track.

One of the most common concerns is traffic volume. How much do you need to see meaningful changes? While there’s no one-size-fits-all answer, most practitioners recommend at least 1,000 visitors and a few hundred conversions per variation. Skimp on numbers and you risk chasing random fluctuations instead of real insights.
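If you want a number tailored to your own site, you can estimate it from your current conversion rate and the smallest lift you care about detecting. The sketch below uses a standard textbook approximation for comparing two proportions. The 5% significance level and 80% power are conventional defaults assumed here, not figures from this article, and the example rates are invented.

```python
from math import ceil
from statistics import NormalDist

def visitors_per_variation(baseline_rate: float, relative_lift: float,
                           alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH version to detect the lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)

# Hypothetical page converting at 4%, hoping to detect a 15% relative lift.
n_small_lift = visitors_per_variation(baseline_rate=0.04, relative_lift=0.15)
# The same page, but looking for a much bigger 30% lift.
n_big_lift = visitors_per_variation(baseline_rate=0.04, relative_lift=0.30)

print(n_small_lift, n_big_lift)
```

The pattern to notice: the smaller the improvement you're hunting for, the more traffic you need. Treat the 1,000-visitor guideline as a floor, not a finish line.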

Think of A/B testing as a head-to-head matchup—two versions duking it out to crown a clear winner. On a landing page, that might mean swapping headlines or button text.

Multivariate testing is more like a bracket tournament. You’re mixing and matching, say, a new headline, an image swap, and a different button color all at once. The result? You see which combination shines brightest, but it demands much more traffic and is more complex to set up and analyze.
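To see why the traffic requirement climbs so quickly, count the combinations. This sketch assumes just two options for each of the three elements mentioned above and an illustrative 1,000 visitors per version; the labels are placeholders.

```python
from itertools import product

headlines = ["current headline", "new headline"]
images = ["current image", "new image"]
button_colors = ["current color", "new color"]

# Every possible pairing of headline, image, and button color.
combinations = list(product(headlines, images, button_colors))

visitors_per_version = 1_000  # illustrative minimum per version
ab_test_traffic = 2 * visitors_per_version
multivariate_traffic = len(combinations) * visitors_per_version

print(len(combinations))     # 8
print(ab_test_traffic)       # 2000
print(multivariate_traffic)  # 8000
```

Add a third option to any one element and you jump from 8 versions to 12, each needing its own share of visitors.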

Key Takeaway: For most teams dipping their toes into experimentation, A/B tests deliver quick, straightforward wins. You’ll need fewer visitors and walk away with clear, actionable insights.

Once results land, you have three clear paths:

At BrandBooster.ai, we follow a data-first approach to remove guesswork from your strategy. Our outcome-driven solutions pair every tweak with solid evidence, so you can push growth forward. Discover more at https://www.brandbooster.ai.